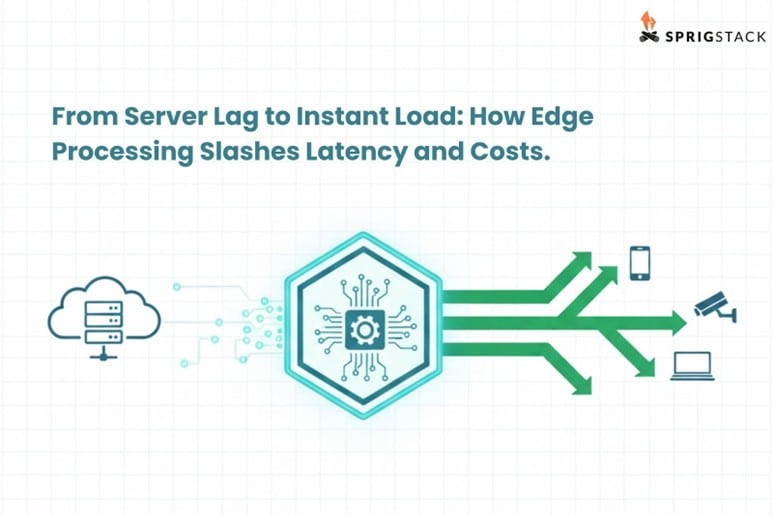

From server lag to instant load: How edge processing slashes latency and costs

Time has become an invisible enemy for most businesses. When the gap between a user’s action and the system’s response stretches beyond an acceptable limit, conversions turn into abandonment, satisfaction gives way to churn, and safety gives way to risk. Whether it’s a factory sensor meant to detect subtle temperature changes, a shopper pressing the “Buy Now” button, a doctor viewing a live diagnostic feed, or a gamer expecting millisecond-level responsiveness, latency is the experience killer.

To overcome this, businesses have relied on the usual fixes: server upgrades, more bandwidth, and cloud scaling. Even with these measures in place, the results are often unsatisfactory, because no matter how much horsepower you throw at a distant data center, the underlying problem of geographical distance remains. That’s where edge processing enters the scene. By shifting computation closer to the data source, sometimes onto the device itself, custom web development services can lower cloud bills, shorten round-trip delays, reduce infrastructure load, and deliver smoother user experiences. With that in mind, let’s explore the power of edge processing that many businesses have yet to tap into.

Removing the latency bottleneck of remote cloud processing

In a traditional cloud-based infrastructure, every user action, whether a sensor update, a stream request, or a button tap, is encoded and sent to remote servers. Because of the distance, delays are inevitable. In other words, the geographical gap between the remote cloud server and the user’s device becomes the real enemy of real-time performance. High network traffic or sudden congestion worsens the lag further. The result? Applications often feel heavy and unresponsive.

Edge processing counters this challenge by:

- Executing the programming logic closer to the user, which can cut round-trip times from tens or hundreds of milliseconds to single digits.

- Handling high-frequency interactions locally so that apps stay responsive, especially during traffic spikes.

- Allowing real-time decision-making at the edge, thereby reducing the dependency on cloud server availability.
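
To see why distance alone matters, here is a rough back-of-the-envelope sketch. It assumes signals travel through optical fiber at roughly 200,000 km/s (about two thirds of the speed of light in a vacuum) and ignores routing, queuing, and processing time, so real round trips will be longer. The two distances are hypothetical examples, not measurements.

```python
# Approximate signal speed in optical fiber (assumption: about 2/3 of c).
FIBER_SPEED_KM_PER_S = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


# Hypothetical distances: a far-away cloud region vs. a nearby edge node.
cloud_rtt = min_round_trip_ms(8_000)
edge_rtt = min_round_trip_ms(50)

print(f"distant region: {cloud_rtt:.1f} ms, edge node: {edge_rtt:.1f} ms")
```

Even before any server work happens, the distant region in this sketch costs about 80 ms per round trip, while the nearby node costs about half a millisecond.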

Eliminating bandwidth overload from constant round trips

Many applications are built to push raw data continuously to the cloud in a variety of forms, including telemetry, video feeds, and sensor streams. The more data sent upstream, the higher the operational costs. On top of that, bandwidth saturation raises the risk of network slowdowns and packet loss.

For this reason, many custom web development services now rely on edge processing. Here’s how it can reduce infrastructure expenses.

- Data is filtered, compressed, and aggregated locally so that only essential streams are sent to the cloud server.

- Events are processed on the device or at local gateways to eliminate redundant uploads.

- Data-heavy workflows, like IoT systems or on-premise operations, can be optimized further, reducing bandwidth consumption by about 60% in some cases.
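
As an illustration of local aggregation, the sketch below collapses raw sensor readings into one compact summary per window before anything is uploaded. The function names, the window size, and the sample temperatures are all made up for this example; a real gateway would pick its own summary fields.

```python
from statistics import mean


def summarize_window(readings: list[float]) -> dict:
    """Collapse one window of raw readings into a compact summary."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
    }


def aggregate(readings: list[float], window_size: int) -> list[dict]:
    """Split readings into fixed-size windows and summarize each one."""
    return [
        summarize_window(readings[i:i + window_size])
        for i in range(0, len(readings), window_size)
    ]


# Hypothetical temperature samples collected at the edge.
raw = [21.0, 21.2, 21.1, 21.3, 24.9, 25.1, 25.0, 25.2]
summaries = aggregate(raw, window_size=4)

print(f"{len(raw)} raw readings -> {len(summaries)} summaries sent upstream")
```

Here eight readings become two uploads. The actual saving depends on the window size and on how much detail the cloud side really needs.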

Slashing cloud costs that escalate with every request

Cloud bills tend to grow with the number of compute cycles, API calls, and bytes transferred. For high-traffic platforms like IoT networks, consumer marketplaces, or media apps, monthly cloud invoices often overrun the budget. As a result, costs become unpredictable and hard for teams to sustain.

This is where edge processing comes in, bringing down unnecessary request-based expenses by:

- Offloading lightweight and repetitive tasks from expensive centralized compute systems.

- Cutting down API hits by enabling local validations, routing, caching, and transformations.

- Stabilizing cloud consumption patterns so that businesses can grow their user traffic without cloud charges growing in proportion.
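
One way to picture the reduction in API hits is a small time-to-live cache sitting at the edge. The sketch below is a minimal illustration, not a production cache: `EdgeCache`, `fake_origin`, the 30-second TTL, and the request path are all hypothetical names and values chosen for the example.

```python
import time
from typing import Callable


class EdgeCache:
    """Serve repeated requests locally; call the origin only on a miss or expiry."""

    def __init__(self, fetch_origin: Callable[[str], str], ttl_seconds: float):
        self._fetch = fetch_origin
        self._ttl = ttl_seconds
        self._entries: dict[str, tuple[float, str]] = {}
        self.origin_calls = 0  # counts billable requests to the central API

    def get(self, key: str) -> str:
        now = time.monotonic()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]  # fresh entry: no upstream request
        self.origin_calls += 1
        value = self._fetch(key)
        self._entries[key] = (now, value)
        return value


def fake_origin(key: str) -> str:
    # Stand-in for a call to the central cloud API (hypothetical).
    return f"payload-for-{key}"


cache = EdgeCache(fake_origin, ttl_seconds=30)
for _ in range(100):
    cache.get("/products/42")

print(f"requests served: 100, origin calls: {cache.origin_calls}")
```

In this run, 100 identical requests result in a single origin call. How long entries may safely stay cached depends on how fresh the data has to be.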

Maintaining response speed even during traffic surges and peak load times

Centralized servers are known for their rigidity. As a result, high-traffic moments such as real-time dashboards, flash sales, authentication bursts, or streaming spikes can cause them to choke. As more users demand the same backend resources, queues begin to grow. The result? Latency climbs past acceptable limits, and application performance starts collapsing under load.

Edge processing uses local computing to keep response times within the target limit. Here’s how.

- It handles high-volume, immediate tasks near users, thereby maintaining stability in the core cloud network.

- Workload gets distributed across the edge nodes, thereby preventing sudden central server overload.

- Applications can deliver real-time responses during peak loads without relying solely on autoscaling the central cloud.
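
The idea of spreading work across edge nodes can be sketched with a very simple routing rule: send each request to the lowest-latency node that still has spare capacity. The node names, latencies, and capacities below are invented for the example, and real platforms use far more sophisticated placement logic.

```python
from dataclasses import dataclass


@dataclass
class EdgeNode:
    name: str
    latency_ms: float
    capacity: int
    active: int = 0

    def has_room(self) -> bool:
        return self.active < self.capacity


def route(nodes: list[EdgeNode]) -> EdgeNode:
    """Pick the lowest-latency node that is not yet saturated."""
    candidates = [node for node in nodes if node.has_room()]
    if not candidates:
        raise RuntimeError("all edge nodes are saturated")
    chosen = min(candidates, key=lambda node: node.latency_ms)
    chosen.active += 1
    return chosen


# Hypothetical nodes: edge-a is closer, edge-b absorbs the overflow.
nodes = [
    EdgeNode("edge-a", latency_ms=4.0, capacity=2),
    EdgeNode("edge-b", latency_ms=9.0, capacity=2),
]
assignments = [route(nodes).name for _ in range(4)]
print(assignments)
```

Once the nearest node fills up, later requests spill over to the next one instead of queuing behind a single central server.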

Balancing heavy data transfers that increase infrastructure stress

Whether it’s images, logs, or analytical data, applications that move large datasets put heavy stress on both storage and network infrastructure. As a result, teams are forced to scale storage buckets, databases, and compute clusters just to keep pace with continuous ingestion. Even then, scaling alone addresses neither the rising maintenance costs nor the lag between user requests and system responses.

That’s why many custom web development services now leverage edge computing:

- To process data locally so that storage utilization and computational demand within the cloud’s core infrastructure can be minimized.

- To send only structured, enriched, or anomaly-flagged insights upstream.

- To reduce ingestion frequency and volume so that scaling requirements shrink accordingly.
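
Sending only anomaly-flagged insights upstream might look something like the sketch below. It uses a plain z-score against a recent baseline as the anomaly test, which is an assumption made for illustration; the threshold of 3.0 and the sample readings are likewise hypothetical.

```python
from statistics import mean, pstdev


def flag_anomalies(
    baseline: list[float], incoming: list[float], z_threshold: float = 3.0
) -> list[dict]:
    """Return only the incoming readings that deviate strongly from the baseline."""
    mu = mean(baseline)
    sigma = pstdev(baseline)
    flagged = []
    for value in incoming:
        # With a perfectly flat baseline there is no spread to compare against,
        # so this simple sketch flags nothing in that case.
        z = 0.0 if sigma == 0 else (value - mu) / sigma
        if abs(z) >= z_threshold:
            flagged.append({"value": value, "z_score": round(z, 1)})
    return flagged


# Hypothetical readings: one clear outlier among normal values.
baseline = [20.0, 20.5, 19.5, 20.2, 19.8]
incoming = [20.1, 19.9, 27.4, 20.3]

upstream = flag_anomalies(baseline, incoming)
print(f"{len(incoming)} readings processed locally, {len(upstream)} sent upstream")
```

Only the outlier leaves the device, while the routine readings are handled and discarded locally.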

Mitigating the risks of centralized failure points

When computation relies on a single cloud cluster or region, any outage there can affect user experiences across the world. The impact can range from a minor disruption to complete service failure, and a serious outage can mean extended downtime and significant revenue loss. That’s why edge processing has become more important than ever. Here’s how it helps.

- It allows apps to operate independently of cloud network availability, especially during network disruptions.

- Computation becomes decentralized, thereby ensuring failure in one region won’t cascade to others.

- Teams can build fault-tolerant architectures so that users continue to receive services from the nearby nodes.
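
A fault-tolerant setup of this kind can be sketched as a simple failover loop: try the nearest node first and fall through to the next one if it is unavailable. The exception class, handler functions, and node names are hypothetical stand-ins for real health checks and network calls.

```python
from typing import Callable


class NodeUnavailable(Exception):
    """Raised when a node cannot serve the request (hypothetical error type)."""


def serve_with_failover(
    request: str, handlers: list[tuple[str, Callable[[str], str]]]
) -> tuple[str, str]:
    """Try nodes in order of proximity and return the first successful response."""
    errors = []
    for name, handler in handlers:
        try:
            return name, handler(request)
        except NodeUnavailable as exc:
            errors.append(f"{name}: {exc}")
    raise NodeUnavailable("; ".join(errors))


def down(request: str) -> str:
    # Simulates a node caught in a regional outage.
    raise NodeUnavailable("region outage")


def healthy(request: str) -> str:
    return f"ok:{request}"


served_by, response = serve_with_failover(
    "GET /status", [("edge-paris", down), ("edge-frankfurt", healthy)]
)
print(served_by, response)
```

The user still gets a response from the second node, so the outage in one region does not turn into a global failure.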

Conclusion

Edge processing isn’t merely a performance optimization. It’s an architectural shift that can lower cloud costs, reduce latency, stabilize global performance, and reduce infrastructure inefficiencies. Because crucial tasks are handled near the user, custom web development services no longer have to depend so heavily on long-distance data travel and centralized server bottlenecks.